By: Blake Thompson

To keep pace with maps for machines, we are developing a new version of the Vector Tile Specification, the open standard powering our HD Vector Maps. We're scoping this specification, known as Vector Tile 3 (VT3), in the open on GitHub. Don't miss my talk at Locate, where I'll walk through the updated format and explain how it can dramatically reduce the bandwidth needed to stream data to devices and the storage needed to cover larger areas offline.

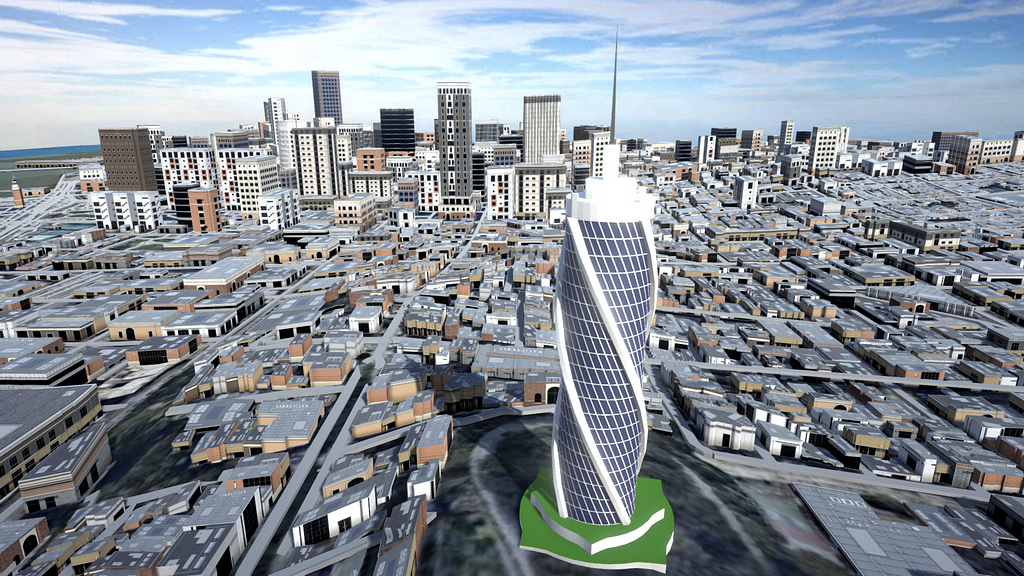

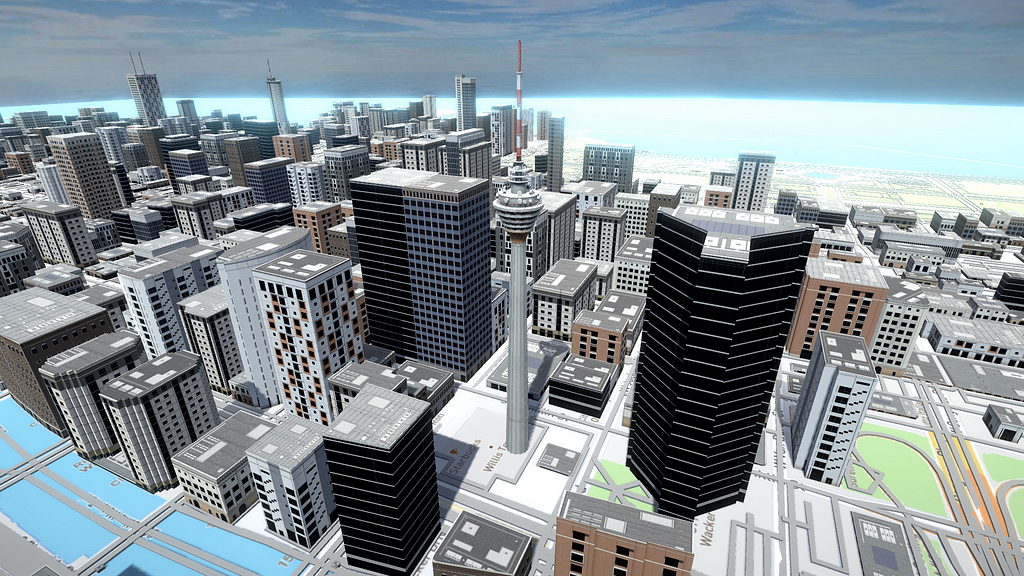

Maps used by machines will play a pivotal role in an entirely new world of robots and automation. So what kind of maps do robots need, and how are those maps built? Autonomous vehicles, drones, and AR applications need HD Vector Maps to understand the world around them, and that data needs millimeter-level accuracy and precision, plus the ability to update live as the world changes.

With Vector Tiles, vector data is cut into small tiles, distributed with low latency around the globe, and able to receive partial updates in real time as the road network gets smarter. In short, VT3 brings Snapchat-level scale to HD automotive maps, giving your fleet up-to-date maps as quickly as possible, anywhere in the world.
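
Vector tiles are typically addressed with the XYZ ("slippy map") scheme over Web Mercator, and the Mapbox Vector Tile 2.x spec gives each layer a default extent of 4096 integer units per tile side. The following is a minimal sketch under those assumptions that finds the tile containing a point and the ground size of one coordinate step at a given zoom. The helpers `lonlat_to_tile` and `grid_step_m` are hypothetical and not part of any Mapbox API.

```python
import math

# Assumption: Web Mercator (EPSG:3857) equatorial circumference, in meters.
EARTH_CIRCUMFERENCE_M = 40075016.686


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) index of the XYZ tile containing a WGS84 lon/lat at `zoom`."""
    n = 2 ** zoom  # tiles per axis at this zoom level
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so lon = 180 or latitudes beyond the Web Mercator limit stay in the grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def grid_step_m(zoom: int, extent: int = 4096) -> float:
    """Approximate ground size, at the equator, of one integer coordinate step in a tile."""
    return EARTH_CIRCUMFERENCE_M / (2 ** zoom) / extent
```

Under these assumptions one step is about 1.17 mm at zoom 23 and about 0.58 mm at zoom 24, so millimeter-level precision at the equator calls for zoom 24 tiles or a larger extent. A client only needs to fetch the handful of tiles around its position, and an update to one area touches only the tiles that cover it.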

Improved Metadata for HD Vector Maps

Vector Tiles contain not only information about where a feature lies but also information that describes what the feature represents. This is commonly known as metadata, and today's Vector Tiles can store it only as simple key/value pairs.

```json
{
  "road_name": "Main Street",
  "other_name": "Fred Road",
  "speed_limit": 50,
  "lanes": 3
}
```
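
In the Mapbox Vector Tile 2.x specification, each layer keeps its property names in a shared `keys` table and its scalar property values in a shared `values` table. Each feature then points into those tables through a `tags` list of alternating key and value indices. Below is a minimal Python sketch of that idea. It assumes plain dicts as input and skips the protobuf serialization step. `encode_properties` is a hypothetical helper, not a library function.

```python
from typing import Any


def encode_properties(
    features: list[dict[str, Any]],
) -> tuple[list[str], list[Any], list[list[int]]]:
    """Build shared keys/values tables and per-feature tag index pairs, MVT 2.x style."""
    keys: list[str] = []
    values: list[Any] = []
    key_index: dict[str, int] = {}
    # Index values by (type, value) so 50, 50.0, and True stay distinct entries.
    value_index: dict[tuple[type, Any], int] = {}
    all_tags: list[list[int]] = []
    for props in features:
        tags: list[int] = []
        for key, value in props.items():
            if isinstance(value, (dict, list)):
                # A flat key/value table only holds scalars (strings, numbers, bools).
                raise TypeError(f"{key!r}: nested maps/arrays are not supported")
            if key not in key_index:
                key_index[key] = len(keys)
                keys.append(key)
            vkey = (type(value), value)
            if vkey not in value_index:
                value_index[vkey] = len(values)
                values.append(value)
            tags.extend((key_index[key], value_index[vkey]))
        all_tags.append(tags)
    return keys, values, all_tags
```

Given two road features that share `speed_limit: 50`, the key and the value are each stored once and referenced by index from both features. A nested value such as the `road_name` map in the VT3 example below has no slot in these tables, so the sketch rejects it.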

To better organize this data, the VT3 specification introduces maps and arrays as metadata values.

```json
{
  "road_name": {
    "en": ["Main Street", "Fred Road"],
    "fr": ["Rue Principale", "Fred Road"]
  },
  "speed_limit": 50,
  "lanes": 3
}
```
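
Without maps and arrays, a producer that targets a flat key/value model has to invent its own flattening convention, and every client has to know how to undo it. The following minimal sketch uses a dotted-path naming scheme, which is purely hypothetical and not part of any Vector Tile spec, to show how the nested example above turns into six separate keys.

```python
from typing import Any


def flatten(value: Any, prefix: str = "") -> dict[str, Any]:
    """Flatten nested maps/arrays into dotted-path keys (hypothetical convention)."""
    if isinstance(value, dict):
        items = ((str(k), v) for k, v in value.items())
    elif isinstance(value, list):
        # Array positions become path segments: "en.0", "en.1", ...
        items = ((str(i), v) for i, v in enumerate(value))
    else:
        return {prefix: value}  # scalar leaf
    flat: dict[str, Any] = {}
    for key, child in items:
        path = f"{prefix}.{key}" if prefix else key
        flat.update(flatten(child, path))
    return flat
```

The workaround is lossy. Empty maps or arrays vanish entirely, a key that already contains a dot becomes ambiguous, and the key for each array position is stored separately. Native maps and arrays avoid all three problems.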

We are also working to compress metadata further. Altogether, this will result in smaller Vector Tiles and a more developer-friendly experience.

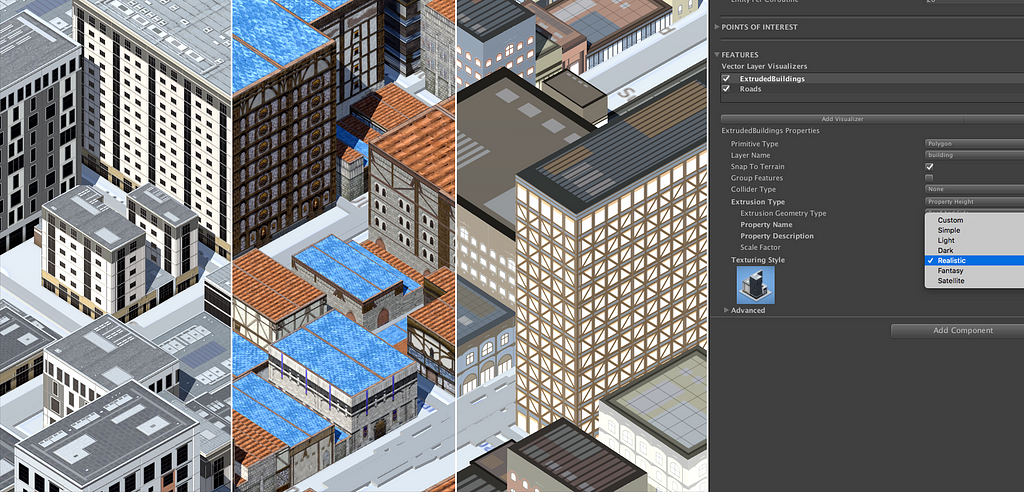

3D Data

Maps for machines and AR/VR depend on data that models 3D space, so VT3 will support points and lines in full 3D. The amount of 3D sensor data continues to grow as LIDAR becomes cheaper and more pervasive. LIDAR datasets are typically very large and are rarely displayed in a web client, which makes them an ideal test case as we continue to try out VT3 in our products.
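
The Mapbox Vector Tile 2.x spec encodes geometry as a stream of command integers (a command id in the low 3 bits, a repeat count above them) followed by zigzag-encoded coordinate deltas, so nearby vertices produce small numbers that pack into few bytes. The following minimal sketch shows how that scheme could carry a third coordinate. It assumes that a z delta is simply appended to each x/y pair, which is a hypothetical extension for illustration and not the VT3 encoding.

```python
MOVE_TO, LINE_TO = 1, 2  # command ids from the MVT 2.x spec


def zigzag(n: int) -> int:
    """Map signed to unsigned ints: 0, -1, 1, -2, 2 -> 0, 1, 2, 3, 4."""
    return (n << 1) if n >= 0 else (-n << 1) - 1


def command(cmd_id: int, count: int) -> int:
    """Pack a command id and repeat count into one command integer, as MVT 2.x does."""
    return (cmd_id & 0x7) | (count << 3)


def encode_linestring_3d(points: list[tuple[int, int, int]]) -> list[int]:
    """Encode one linestring as MoveTo + LineTo with zigzag deltas for x, y, and z.

    Hypothetical: the third (z) parameter per vertex is not part of MVT 2.x.
    """
    if len(points) < 2:
        raise ValueError("a linestring needs at least two points")
    out: list[int] = []
    cx = cy = cz = 0  # the cursor starts at the tile origin
    for i, (x, y, z) in enumerate(points):
        if i == 0:
            out.append(command(MOVE_TO, 1))
        elif i == 1:
            out.append(command(LINE_TO, len(points) - 1))
        # Each parameter is the change from the previous vertex, zigzag-encoded.
        out += [zigzag(x - cx), zigzag(y - cy), zigzag(z - cz)]
        cx, cy, cz = x, y, z
    return out
```

For the points (2, 2, 10), (2, 10, 12), (10, 10, 8) the sketch produces `[9, 4, 4, 20, 18, 0, 16, 4, 16, 0, 7]`. Dropping every third parameter gives `[9, 4, 4, 18, 0, 16, 16, 0]`, the 2D linestring example from the MVT 2.x spec. Delta encoding suits dense, spatially ordered LIDAR points, whose neighbors tend to differ by small amounts.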

Below is a prototype of VT3 built with our web mapping library, Mapbox GL JS. It shows LIDAR data collected by the city of Washington, D.C., which I colorized using our aerial imagery.

Don't miss my talk at Locate. You can learn more about the VT3 specification and how to contribute in the open repo on GitHub. Reach out to our team with any questions, or ask me on Twitter at @flippmoke.

HD Vector Maps Open Standard was originally published in Points of interest on Medium, where people are continuing the conversation by highlighting and responding to this story.